The promise of an AI-powered future built on Microsoft’s Azure OpenAI Service and Microsoft 365 Copilot has many in the corporate world scrambling to gain access to early technology “bits” so they can assess the impact and productivity gains of this perceived paradigm shift. While some Microsoft Copilot-branded products, such as Microsoft Sales Copilot, are now available or soon to be released, much is still in private or limited preview.

How can organizations prepare for Microsoft 365 Copilot when they have limited access to the technology? One thing is certain: when Microsoft 365 Copilot becomes more generally available, predicted for Q1 2024, many organizations will NOT be properly prepared for its rollout or general use.

Since AI results are generated from the relationship of Content + Rationalization, the accessibility of data or content within the corporate tenant is a paramount part of the formula for producing quality results. However, what happens if confidential or sensitive data is unexpectedly evaluated to produce those results? The risk arises because Microsoft 365 Copilot relies heavily on the Microsoft Graph to perform its “magic”.

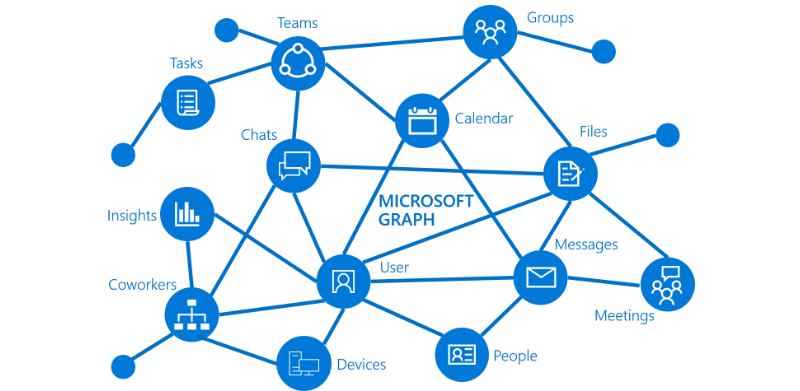

The Microsoft Graph represents object, user, and permission associations within Microsoft 365. Note that the matrix covers all the key elements of data and association, e.g., mail, tasks, users, messages, and files. Since the Microsoft Graph will be the primary “lens” through which Microsoft 365 Copilot interacts with data, it is crucial that users/requestors have access to only the information they need to perform their job function, no more, no less. Microsoft 365 Copilot will ONLY retrieve information that each user already has permission to access, whether that permission was granted directly or through a group.
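To make the permission point concrete, here is a minimal sketch of permission-trimmed retrieval. It is a toy in-memory model, not the Microsoft Graph API: the `Item` class, the `GROUPS` table, the user names, and the file names are all hypothetical and exist only to illustrate the idea that a requestor sees whatever their own identity and group memberships allow.

```python
from dataclasses import dataclass, field


@dataclass
class Item:
    """A toy stand-in for a file, mail, or chat message in the tenant."""
    name: str
    allowed: set[str] = field(default_factory=set)  # user or group names


# Hypothetical group memberships; a real tenant would resolve these from its directory.
GROUPS = {
    "Finance": {"alice"},
    "All Employees": {"alice", "bob"},
}


def principals_for(user: str) -> set[str]:
    """Return the user plus every group the user belongs to."""
    return {user} | {group for group, members in GROUPS.items() if user in members}


def retrieve_for(user: str, items: list[Item]) -> list[str]:
    """Return only the names of items that the user is permitted to read."""
    who = principals_for(user)
    return [item.name for item in items if item.allowed & who]


ITEMS = [
    Item("Q3 forecast.xlsx", {"Finance"}),
    Item("Holiday schedule.docx", {"All Employees"}),
    Item("Salary bands.xlsx", {"All Employees"}),  # overshared by mistake
]

print(retrieve_for("bob", ITEMS))
# → ['Holiday schedule.docx', 'Salary bands.xlsx']
```

In this sketch the trimming works exactly as designed, yet "bob" still receives the salary file, because the mistake lies in the permission itself and not in the retrieval step.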

This discussion implies the governance best practice of “Just Enough Permissions”, also known as the principle of least privilege: granting users only the minimum set of permissions required to perform their tasks. It is a common security practice for limiting the damage that excessive permissions can cause. Giving “just enough” permissions reduces the risk surface and closes potential security vulnerabilities.

The converse is “Oversharing”, where users end up with far more access than they need, typically through neglect, a lack of data governance, and the like. Organizations are especially vulnerable to oversharing in the area of unmonitored External Guest Access.
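As a rough illustration of what checking for oversharing could involve, the sketch below scans a list of sharing entries and flags those granted to broad groups or to addresses outside the organization. The `Grant` record shape, the `BROAD_GROUPS` set, and the `contoso.com` and `fabrikam.com` domains are assumptions made for this example; real sharing data would come from the tenant's own reporting tools and will look different.

```python
from dataclasses import dataclass

# Assumed names for illustration; substitute whatever your tenant actually uses.
BROAD_GROUPS = {"Everyone", "Everyone except external users", "All Employees"}
INTERNAL_DOMAIN = "contoso.com"


@dataclass(frozen=True)
class Grant:
    """One sharing entry: a resource and the principal it is shared with."""
    resource: str
    principal: str  # a group name or a user's email address


def audit(grants: list[Grant]) -> list[tuple[str, str, str]]:
    """Return (resource, principal, reason) for every grant that looks overshared."""
    findings = []
    for g in grants:
        if g.principal in BROAD_GROUPS:
            findings.append((g.resource, g.principal, "broad group"))
        elif "@" in g.principal and not g.principal.lower().endswith("@" + INTERNAL_DOMAIN):
            findings.append((g.resource, g.principal, "external guest"))
    return findings


GRANTS = [
    Grant("HR/Salary bands.xlsx", "Everyone except external users"),
    Grant("Legal/NDA.docx", "partner@fabrikam.com"),
    Grant("Finance/Q3 forecast.xlsx", "alice@contoso.com"),
]

for finding in audit(GRANTS):
    print(finding)
# → ('HR/Salary bands.xlsx', 'Everyone except external users', 'broad group')
# → ('Legal/NDA.docx', 'partner@fabrikam.com', 'external guest')
```

A flagged entry is only a candidate for review. Some broad or external sharing is intentional, so the output is best treated as a worklist for content owners and not as a list of violations.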

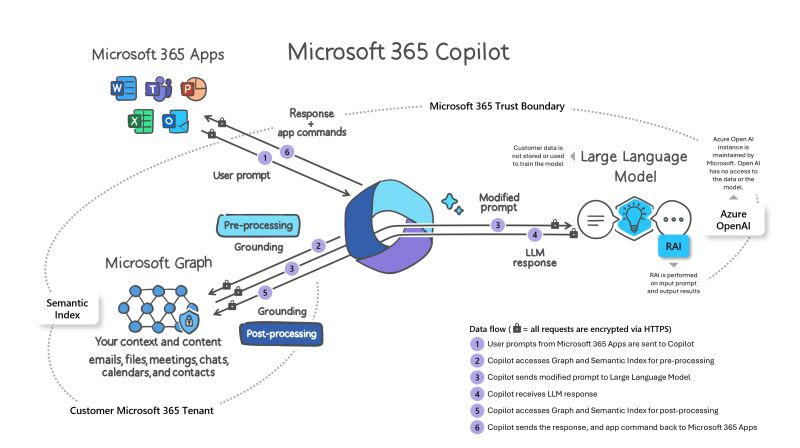

What might this look like in practice? As the accompanying diagram shows, Microsoft 365 Copilot consults the Microsoft Graph at multiple stages (Pre-processing, Grounding, Post-processing) as the content interacts with the Large Language Model (LLM) to produce the generative results that are sent back to the requesting Microsoft 365 applications.

In summary, if content can be “discovered” through the Microsoft Graph, it can become candidate material for an AI-generated result. An unintended consequence of not properly classifying and securing data is that sensitive data can “leak” throughout the organization through generative AI.
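The flow described above can be sketched in a few lines to show where discoverable content enters the picture. This is a simplified illustration under stated assumptions, not Microsoft's implementation: `fake_llm` is a stub standing in for the model, `retrieve` is a hypothetical permission-trimmed search over a tiny in-memory index that ignores the query text for brevity, and the stage boundaries are only approximations of the Pre-processing, Grounding, and Post-processing steps named in the text.

```python
from typing import Callable

# A retriever takes (user, query) and returns the names of readable sources.
Retriever = Callable[[str, str], list[str]]


def fake_llm(prompt: str) -> str:
    """Stand-in for the LLM: reports how many context lines it was given."""
    context_lines = [line for line in prompt.splitlines() if line.startswith("- ")]
    return f"Answer drawn from {len(context_lines)} source(s)."


def run_copilot_sketch(user: str, question: str, retrieve: Retriever) -> tuple[str, str]:
    """Pre-process, ground, call the model, post-process. Returns (prompt, response)."""
    # Pre-processing: normalize the request.
    query = question.strip()
    # Grounding: add whatever content this user is allowed to see.
    sources = retrieve(user, query)
    prompt = "\n".join([f"Question: {query}", "Context:"] + [f"- {s}" for s in sources])
    # Model call (stubbed).
    draft = fake_llm(prompt)
    # Post-processing: attach the sources that were used.
    response = f"{draft} Sources: {', '.join(sources) or 'none'}"
    return prompt, response


def retrieve(user: str, query: str) -> list[str]:
    """Hypothetical permission-trimmed search; the query is ignored for brevity."""
    index = {
        "Salary bands.xlsx": {"alice", "bob"},  # overshared: bob should not be a reader
        "Holiday schedule.docx": {"alice", "bob"},
        "Q3 forecast.xlsx": {"alice"},
    }
    return sorted(name for name, readers in index.items() if user in readers)


prompt, response = run_copilot_sketch("bob", "  What are the salary bands? ", retrieve)
print(response)
# → Answer drawn from 2 source(s). Sources: Holiday schedule.docx, Salary bands.xlsx
```

Nothing in this sketch bypasses a permission check. The overshared file reaches the prompt simply because the requestor is listed as a reader, which is why securing the content matters more than any control applied at generation time.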

Organizations can plan TODAY to avoid this high-risk scenario by properly identifying, governing, classifying, and securing their corporate data. By leveraging tools like Microsoft Purview and Microsoft Syntex – SharePoint Advanced Management (SAM), organizations can get ahead of the Microsoft 365 Copilot adoption wave by ensuring that users have access ONLY to the data they need to perform their work. Those users can then work much more productively when Microsoft 365 Copilot becomes available.

How to prepare for Microsoft 365 Copilot – Microsoft Community Hub

Learn more about Microsoft 365 Copilot: “The Copilot System: Explained by Microsoft” and “How Microsoft 365 Copilot works”.